On the Tangle, White Papers, Proofs, Airplanes, and Local Modifiers

With the research, development, and ecosystem around the IOTA project really beginning to flourish, and with nearly three years having passed since it was first announced to the world, I thought it would be a good time to reflect on how we got here. This blog post aims to shed some more light on the history of the IOTA project and why we have chosen the research and development approach that we have.

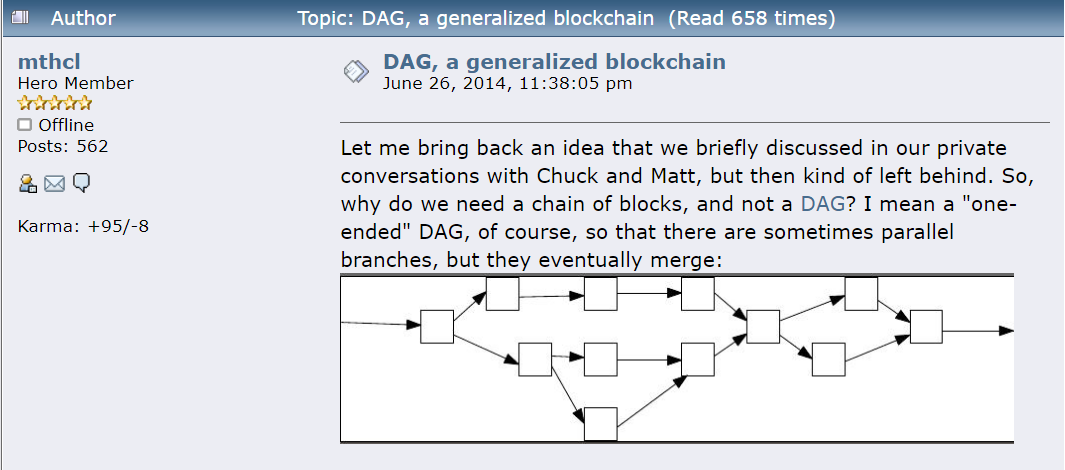

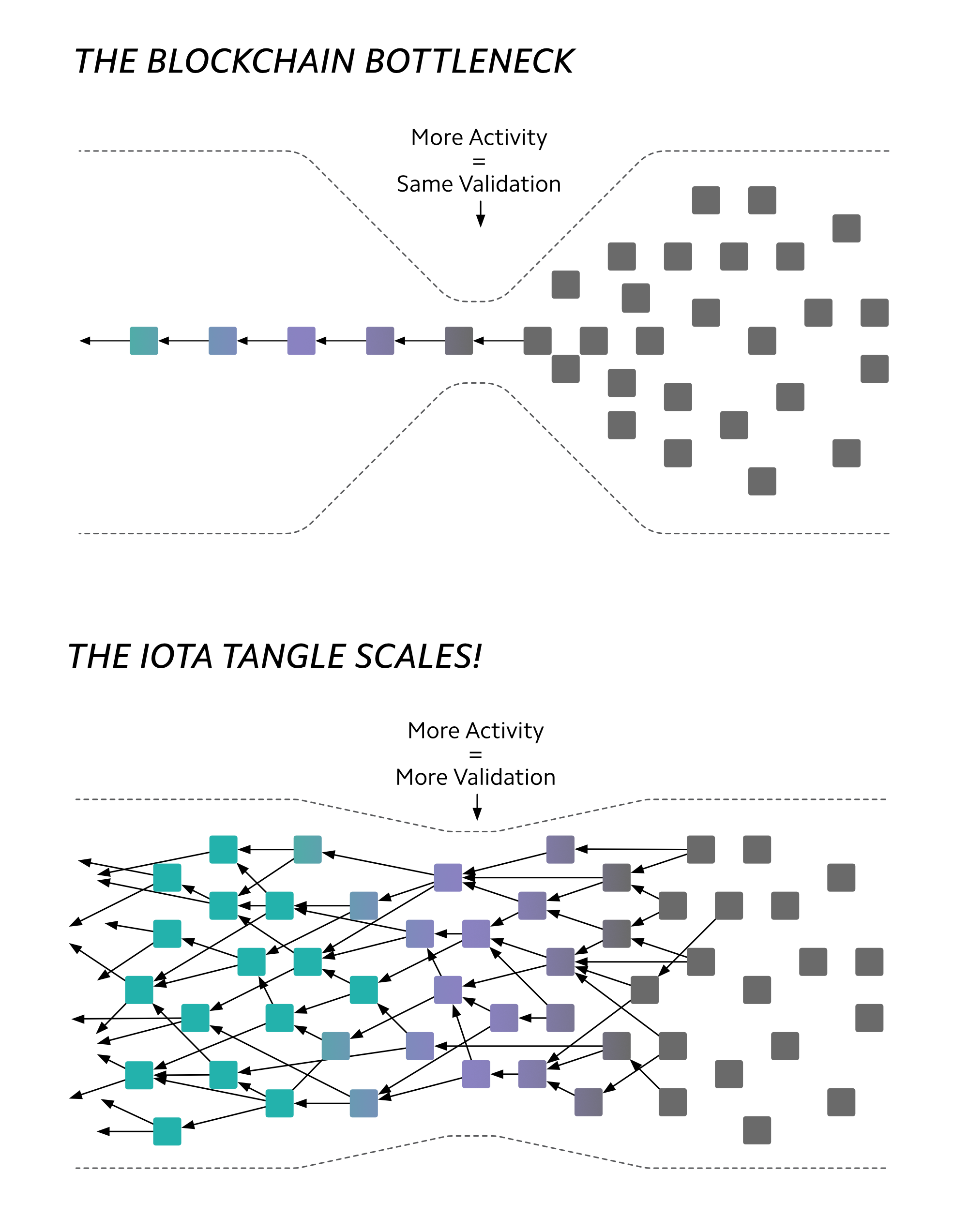

It was around four years ago when some of the ideas around a distributed ledger architecture based on a Directed Acyclic Graph (as opposed to a chain) began to be discussed on various Nxt and Bitcointalk forums. The blockchain scaling problem which really came to a head many years later was already well understood at the time. It is not incredibly hard to see that the chain of blocks of finite size, which can only be produced at regular discrete time intervals, produces a throughput bottleneck and leads to high transaction fees that need to be paid to the miners (which is by design). If you wanted to remove the fees and allow the system to scale, the natural idea would thus be to eliminate the bottleneck and the miners.

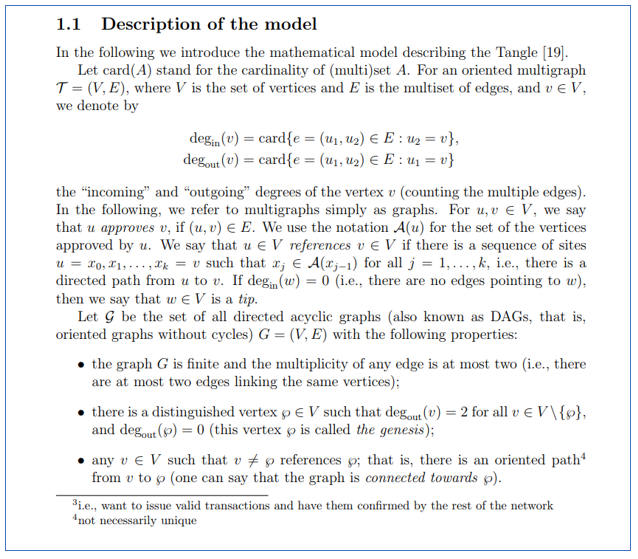

This is, of course, easier said than done, and it raises all sorts of new questions. Where should the next block/transaction/vertex be attached? Who will vet the transactions for consistency and why? How can it be secure against possible attacks? How will consensus be achieved? These questions don’t have trivial answers. It was only after over a year of intense thought, discussion and deliberation that the IOTA founders came up with the idea for an architecture that could potentially resolve these issues. In this system, each transaction (represented by a vertex in the graph) would approve two previous transactions it selects using a particular class of random walk. This became the mathematical model we now all know as the Tangle, and it serves as the foundation for the IOTA core protocol.

It has been almost three years since the first draft of the Tangle white paper describing this mathematical model was published, and it has since taken on a life of its own. The original white paper has gone through many revisions thanks to the many people who suggested improvements (see the “Acknowledgements” section at the end; I take this occasion to thank everyone who provided invaluable feedback). It has been cited quite a few times in other research papers, and it has spawned an ever-expanding number of follow-up papers, written both by researchers at the IOTA Foundation and by independent researchers, that further investigate the properties of this unique architecture.

The goal was to create a decentralized system that could scale and eliminate transaction fees — no easy task. To eliminate the transaction fees, we had to first eliminate the miners — after all, if you want to design a feeless system, you cannot have a dichotomy of “miners” who serve the “simple users”. This bifurcation of roles between the miners and transactors naturally leads to transaction fees because the miners have some kind of resource that others don’t have and they will use this monopoly power to extract rents (in the form of transaction fees, block rewards, or both). Therefore, to eliminate the fees, all the users (i.e. you) would have to fend for themselves. Our idea was to come up with a system where the main principle is “Help others, and others will help you” (you can think of it as a pay-it-forward model).

You can help others by approving their transactions; others can help you by approving your transactions. Let us call the transactions which don’t yet have any approvals "tips"; all newly arriving transactions are tips at first. The idea is that, by approving a transaction, you also indirectly approve all its “predecessors”. It is intuitively clear that to help the system progress, the incoming transactions must approve tips because this adds new information to the system. However, because of network delays, it is not practical to require this: how can you be sure that what you believe to be a tip has not already been approved by someone else maybe 0.1 seconds ago?
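To make the vocabulary concrete, here is a minimal Python sketch of a toy Tangle. It is only an illustration of tips and indirect approval as described above, not the IOTA implementation; the class name `Tangle` and its methods are hypothetical names chosen for this example.

```python
class Tangle:
    """Toy DAG: each transaction records the two transactions it approves."""

    def __init__(self):
        # The genesis transaction approves nothing.
        self.approves = {"genesis": ()}
        # Reverse index: who directly approves each transaction.
        self.approvers = {"genesis": set()}

    def attach(self, tx_id, parent_a, parent_b):
        """Add tx_id, approving two existing transactions (they may coincide)."""
        if tx_id in self.approves:
            raise ValueError(f"duplicate transaction id: {tx_id}")
        for parent in (parent_a, parent_b):
            if parent not in self.approves:
                raise KeyError(f"unknown transaction: {parent}")
        self.approves[tx_id] = (parent_a, parent_b)
        self.approvers[tx_id] = set()
        self.approvers[parent_a].add(tx_id)
        self.approvers[parent_b].add(tx_id)

    def tips(self):
        """Transactions that nobody has approved yet."""
        return {tx for tx, who in self.approvers.items() if not who}

    def indirectly_approved(self, tx_id):
        """Everything reachable from tx_id by following approvals."""
        seen, stack = set(), list(self.approves[tx_id])
        while stack:
            tx = stack.pop()
            if tx not in seen:
                seen.add(tx)
                stack.extend(self.approves[tx])
        return seen


tangle = Tangle()
tangle.attach("a", "genesis", "genesis")
tangle.attach("b", "genesis", "a")
tangle.attach("c", "a", "b")
print(tangle.tips())                            # {'c'}
print(sorted(tangle.indirectly_approved("c")))  # ['a', 'b', 'genesis']
```

Note that every new transaction starts out as a tip, and that approving `c` would vouch for `a`, `b` and the genesis as well.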

Instead, it is all about the approval strategies. Sometimes (in fact, most of the time) we use the term “tip selection”, but it is important to note that the participants of the network are not obliged to select tips; we would like to require that everybody do so, but we can’t. If everybody collaborates with everybody, approving only recent and good (non-contradicting) transactions, then we are in good shape. On the other hand, for someone who cares only about themselves, a natural strategy would be to choose a couple of old transactions and approve them all the time, avoiding the more cumbersome work of checking new transactions for consistency and thereby adding new information to the system. If everybody behaves in this way, then no new transactions will be approved, and the network will effectively come to a halt. Thus, if we want it to work, we need to incentivize the participants to collaborate and approve each other’s recent transactions.

Yes, once again, in the end, it is all about the incentives. Everybody wants to be helped by others, but not everybody cares about helping others themselves. To resolve this without having to introduce monetary rewards, we could instead think of a reward as simply not being punished by others. We need to slightly amend our main principle, which now reads: “Help others, and the others will help you; however, if you choose not to help others, others will not help you either”. When a new transaction references two previous transactions, it is a statement of “I vouch for these transactions which have not been vouched for before, as well as all their predecessors, and their success is tied to my success.” Unfortunately, as humans, none of us can choose our parents and ancestry, but in the world of IOTA you can, so choose wisely 😉. In the IoT environment it is reasonable to assume that a good proportion of the network participants will follow the “recommended” (by whom? by m̶e̶ ̶C̶o̶m̶e̶-̶f̶r̶o̶m̶-̶B̶e̶y̶o̶n̶d̶ the IOTA Foundation, of course 😊) tip selection strategy. Our task is thus to design this strategy in such a way that it is advantageous for everybody to use it (provided that at least a good part of the others do as well). After many discussions and failed attempts, we concluded that the Markov Chain Monte Carlo (MCMC) tip selection algorithm (more precisely, the family of tip selection algorithms) at the heart of the Tangle would have these properties.

The Tangle as a mathematical model is both very simple and incredibly complicated. It is very simple because there is only one rule which is really imposed: a transaction must approve (validate) two older transactions. It is incredibly complicated because the nodes of the network are free to choose these two transactions in any way they like, and this can make the topology (i.e. grosso modo, the way the transactions are connected to each other) of the Tangle very messy. Even if we assume that everybody follows the same tip selection strategy, the resulting graph is random, with a structure that is quite difficult to assess. As argued in the paper, a good choice of tip selection strategy is a “random walk towards the tips” (a.k.a. MCMC), so, mathematically speaking, we are dealing with Markov chains in random environments, and models of this sort are notoriously difficult. Believe me, Markov processes in random environments are one of the main topics of my “pure math” research, so I do know a fair bit about this.
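For readers who prefer code to words, a “random walk towards the tips” can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the reference algorithm: the walk steps from a transaction to one of its direct approvers, and the step is biased by an exponential factor in the cumulative weight with a parameter `alpha`. The exact form of the bias, the function names, and the dictionary layout are assumptions made for this example.

```python
import math
import random


def cumulative_weight(approvers, tx):
    """Own weight 1 plus the number of transactions approving tx, directly or not."""
    seen, stack = set(), list(approvers[tx])
    while stack:
        t = stack.pop()
        if t not in seen:
            seen.add(t)
            stack.extend(approvers[t])
    return 1 + len(seen)


def walk_to_tip(approvers, start, alpha, rng=random):
    """Walk from `start` along approver links until a tip is reached.

    approvers maps each transaction to the set of its direct approvers.
    alpha >= 0 controls the bias: 0 gives a simple (unbiased) random walk,
    larger values favour approvers with a larger cumulative weight.
    """
    current = start
    while approvers[current]:
        candidates = sorted(approvers[current])
        heights = [cumulative_weight(approvers, c) for c in candidates]
        heaviest = max(heights)
        # Assumed bias: probability proportional to exp(alpha * weight).
        # Subtracting the maximum only rescales and avoids numeric underflow.
        probs = [math.exp(-alpha * (heaviest - h)) for h in heights]
        current = rng.choices(candidates, weights=probs, k=1)[0]
    return current


# Tiny example: "b" is a lonely tip, "a" already has one approver "c".
approvers = {"genesis": {"a", "b"}, "a": {"c"}, "b": set(), "c": set()}
print(walk_to_tip(approvers, "genesis", alpha=0.0))  # "b" or "c", equally likely
print(walk_to_tip(approvers, "genesis", alpha=5.0))  # "c" with high probability
```

Recomputing cumulative weights at every step is wasteful; it keeps the sketch short but would not be acceptable in a real node.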

If anyone thinks it is actually not so difficult, try to prove the stability of the number of tips for alpha=0. To be precise, assume that the tip selection is made by a simple random walk towards the tips (so it is independent of cumulative weights), and prove that the expected number of tips at time t is uniformly bounded by a constant. It is something that seems to be almost intuitively obvious, and it is indeed confirmed by simulations, but try to prove it rigorously. This can be done: my former student, Darcy Camargo, was able to do it just recently (a very impressive display of his mathematical prowess). And, if the above is too easy for you, then try to prove Conjecture 1.1 in “Equilibria in the Tangle”.
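If you would rather watch the number of tips than prove anything about it, a toy experiment along the following lines is easy to run. It is a rough sketch under simplifying assumptions of my choosing: transactions arrive one per tick, every issuer sees the Tangle with a fixed lag of `delay` transactions, and both parents are chosen by a simple random walk (alpha=0) from the genesis. The function name and parameters are hypothetical, and an experiment like this is evidence, not a proof.

```python
import random


def simulate_tip_counts(n_tx, delay, seed=0):
    """Return the number of tips after each of n_tx arrivals (alpha = 0)."""
    rng = random.Random(seed)
    approvers = [[]]   # approvers[i]: transactions directly approving i; 0 is genesis
    tip_count = 1      # the genesis starts out as the only tip
    history = []
    for new_tx in range(1, n_tx + 1):
        # The issuer only sees transactions 0 .. visible-1 (network delay).
        visible = max(1, new_tx - delay)
        parents = set()
        for _ in range(2):
            current = 0
            while True:
                seen = [a for a in approvers[current] if a < visible]
                if not seen:
                    break              # current looks like a tip to this issuer
                current = rng.choice(seen)
            parents.add(current)
        approvers.append([])
        tip_count += 1                 # the new transaction is a tip
        for p in parents:
            if not approvers[p]:
                tip_count -= 1         # p was a real tip until now
            approvers[p].append(new_tx)
        history.append(tip_count)
    return history


counts = simulate_tip_counts(n_tx=500, delay=10)
print(max(counts), sum(counts) / len(counts))
```

Changing `delay` and `n_tx` and plotting `counts` gives a feel for what the statement claims: the tip count fluctuates but does not appear to drift upwards over time.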

But on the subject of proofs — as you may know, I am a research mathematician working in the area of Markov processes and related things. I have proved many theorems in my life. Coming from me, this may sound particularly surprising, but still: do not overestimate the importance of mathematical proofs in the real world. OK, proofs are important, and, even more importantly, proofs are frequently beautiful. But, to prove anything, you need first to set up a mathematical model based on assumptions, and these models never reflect reality in a completely accurate way. The real world is too messy and complicated for these simplifying assumptions. The other founders behind the IOTA project and I have always appreciated that in order to engineer and build something for the real world, an iterative development process would be necessary, bootstrapping the development with certain safety rails along the way (e.g. the Coordinator), while continuously analyzing, critiquing and improving our understanding of the system. This is simply the nature of engineering. A protocol like IOTA doesn’t live on a piece of paper or on a whiteboard, but in the real world where many of the assumptions of an abstract mathematical model — the Tangle — fall apart.

Mathematical models, proofs and theorems can be extremely valuable, but it is also very important to appreciate their limitations. Any white paper which claims to have proven everything about the behavior of a system which will exist not as an abstract idea, but rather as a real-world implementation, is either clairvoyant or (more likely) just naive. One can try to write technical specifications and prove every aspect of the design and safety of an airplane, but until it has actually been built and you can see it fly, caution must be exercised before making any sweeping claims about its flight readiness.

Besides, even in the context of mathematical models, it is not unusual that a statement is widely believed to be true but no proof is known; there are numerous examples of these in mathematics and mathematical physics (also, may I humbly remind you that all of today’s public key cryptography relies on unproven assumptions?). To complicate the situation just a little bit more, in our context quantitative results are needed. It is not quite enough to prove that, for example, “with probability 1, any transaction will be eventually confirmed”. To illustrate what I mean here, consider the following “proof” of the statement “Bitcoin is broken”: Indeed, given an address, one can just keep randomly guessing the corresponding private key; since there are finitely many of them, eventually one succeeds, with probability 1. 😎
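To see why “with probability 1” is not the same as “in practice”, it helps to put numbers on the joke. The sketch below assumes an effective search space of 160 bits (the size of the hash behind a classic Bitcoin address) and a hypothetical attacker making 10^18 independent guesses per second; both figures are assumptions chosen for illustration.

```python
import math


def success_probability(guesses, key_space_bits):
    """P(at least one of `guesses` independent uniform guesses hits the key)."""
    p_single = 2.0 ** -key_space_bits
    # 1 - (1 - p)^k, computed in a numerically stable way.
    return -math.expm1(guesses * math.log1p(-p_single))


def expected_years(key_space_bits, guesses_per_second):
    """Expected waiting time until the first hit (geometric distribution)."""
    seconds = 2 ** key_space_bits / guesses_per_second
    return seconds / (365.25 * 24 * 3600)


# Assumed figures: 160-bit search space, 10^18 guesses per second.
print(success_probability(10**18 * 3600 * 24 * 365, 160))  # chance after one year
print(expected_years(160, 1e18))                           # expected wait in years
```

Under these assumptions the expected wait is on the order of 10^22 years, so the statement “one eventually succeeds” is true and useless at the same time. That is the sense in which quantitative results are needed.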

Please don’t get me wrong: the above is not, by any means, a license to not care about proofs.

But when we don’t have a completely rigorous mathematical proof of a desired statement such as “The Tangle is provably secure” (or maybe we do, but only in the context of an oversimplified mathematical model), we have to work hard to find nonmathematical arguments, run many simulations of everything, construct other mathematical models which are more adequate and/or tractable, imagine every possible kind of attack on the system and figure out why they will not work (or figure out why they will and then make amendments to defend against them), and so on. We also need to incentivize external research on all this (expect interesting news in this direction soon).

Speaking of amendments to the mathematical models, some of you may have noticed the paper “Local Modifiers in the Tangle” which was recently published on the Academic Research page at www.iota.org. It is a draft that has been circulating in the Foundation for several months; the version we posted was stripped of some serious-but-unfinished math and “internal-use” comments. The paper’s abstract reads:

As of today, there are many cryptocurrency systems around. They mostly share one common feature: all participants of the network interpret the same ledger in the same way. In this paper, we introduce the idea of local modifiers: the nodes of the network can interact with the ledger in different ways, depending on various kinds of information locally available to them. We then argue that such an approach permits to increase the security and the scalability of the Tangle.

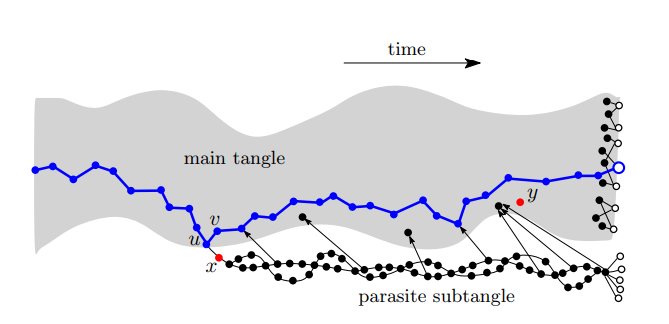

What we seek to do in that paper is to further strengthen the tip selection algorithm, introducing also the “local view of the nodes” (specifically, the time when a node received a particular transaction) into play. This approach seems to be quite effective against many attacks of the type “do-something-secretly-then-broadcast” (these include the double-spending “parasite chain” attack and also the “splitting attack” described in the Tangle white paper). It is important to stress that we do not seek to replace the MCMC tip selection algorithm, but rather, in the spirit of iterative development, to amend it in our never-ending pursuit to improve the protocol and make it more secure.

While more research on Local Modifiers is needed before we can confidently say whether they improve the existing tip selection algorithm and whether they will be incorporated into the recommended IOTA tip selection strategy, we share our research and development early and often, in the spirit and ethos of open source. By doing so, we give our amazing ecosystem of researchers and developers an opportunity to contribute to the protocol’s evolution. Indeed, the IOTA project would be nowhere today if it were not for these contributions from the IOTA Ecosystem.

Building a protocol to enable secure, feeless, and decentralized value and data exchange for the Internet of Things is not something that happens overnight, in a year, or even in three years. It is not something where all of the desired properties (security, “good” Nash Equilibrium, scalability, etc.) can be proven in a white paper. And it is not something that can be done in the Cathedral. The ideas described in the Bitcoin whitepaper were truly brilliant and it is absolutely remarkable to see what the manifestation of those ideas has become today. But even the great Satoshi Nakamoto (whoever he/she/they may be) failed to consider some crucial flaws in Bitcoin’s design. For us to achieve our vision to make IOTA the backbone protocol for IoT, we, the researchers and engineers at the IOTA Foundation, as well as all the independent researchers and engineers in the IOTA Ecosystem contributing to the project, must do everything we can to make sure the IOTA core protocol is truly built to last.

As with the Internet Protocol itself, research on the IOTA protocol will continue, there will always be room for improvement, and the protocol will need to evolve to meet the changing demands of a constantly evolving world. This is simply the nature of any protocol, because a protocol is nothing more than a shared language: a commonly accepted set of rules that allows us to share information and, in the case of IOTA, also value. Like any language, though, a protocol is a living thing that evolves over time. With the incredible team being assembled at the IOTA Foundation along with the burgeoning ecosystem of researchers, developers, corporations and other contributors to the project, we know we can build IOTA to meet the needs of the Internet of Things industry and help it to evolve to power the machine economy of the future.